Building an AI-powered website search with Retrieval-Augmented Generation (RAG)

AI on its own doesn’t “know” your website. But with RAG, it can retrieve, prioritize, and summarize your exact content.

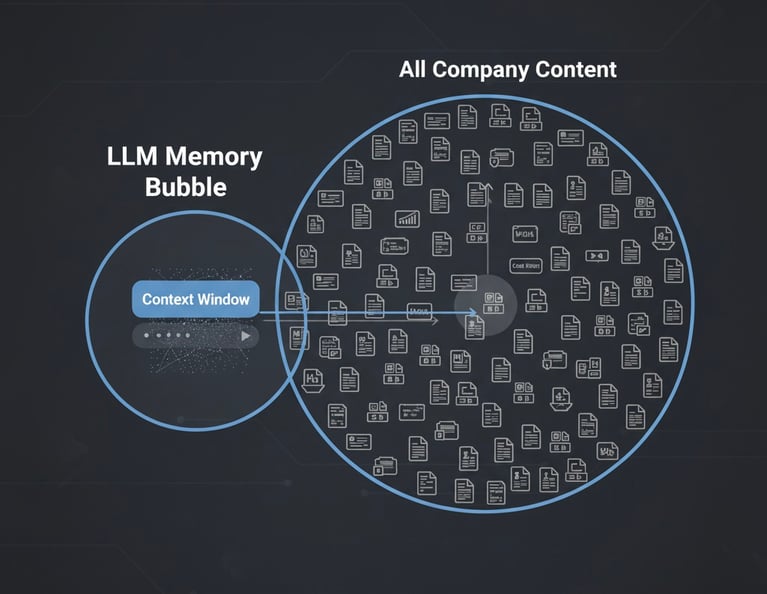

The Problem: AI Has No Memory

Large Language Models (LLMs) are powerful, but on their own they answer questions about your specific business content, such as your website or documents, poorly.

Why? Because you can’t simply push all of that into the model’s “working memory.” LLMs process information inside a limited context window, and you can’t fit an entire website or document library in there.
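To make the size problem concrete, here is a rough back-of-the-envelope sketch. The four-characters-per-token ratio is only a common rule of thumb for English text, and the function names, page sizes, and window size are hypothetical values chosen for illustration, not measurements from the project.

```python
import math

# Rough rule of thumb for English text; real tokenizers vary.
CHARS_PER_TOKEN = 4


def estimate_tokens(text: str) -> int:
    """Approximate the token count of a text from its character length."""
    return math.ceil(len(text) / CHARS_PER_TOKEN)


def fits_in_context(texts: list[str], context_window_tokens: int,
                    reserved_for_answer: int = 1000) -> tuple[int, bool]:
    """Return (estimated total tokens, whether they fit in the window).

    reserved_for_answer keeps some room for the model's reply.
    """
    total = sum(estimate_tokens(t) for t in texts)
    return total, total <= context_window_tokens - reserved_for_answer


# Hypothetical site: 500 pages of about 8,000 characters each.
pages = ["x" * 8000] * 500
total, fits = fits_in_context(pages, context_window_tokens=128_000)
print(total, fits)
```

Even with a generous window, a site of that size overshoots it several times over, which is why the content has to be selected before it reaches the model.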

We wanted to solve this problem for a HubSpot CMS website—building an AI-powered site search and chatbot that could respond strictly from the company’s own content.

The approach we used is called Retrieval-Augmented Generation (RAG).

How RAG Solves the Memory Problem

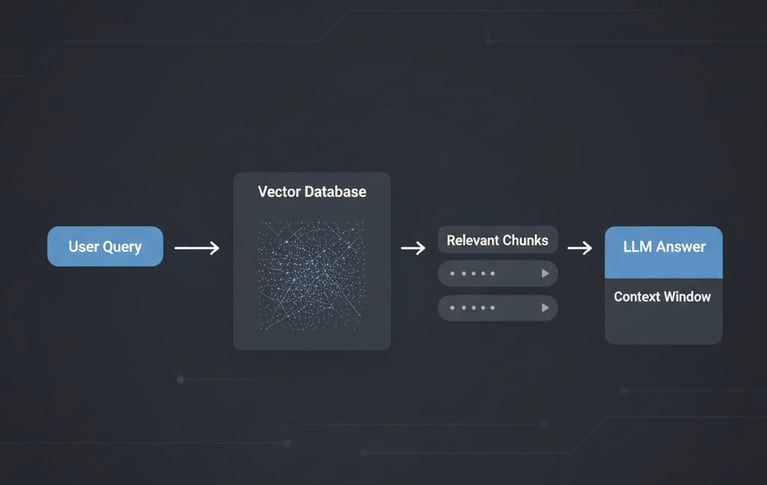

When you interact with AI, all your questions and information must fit into its context window. Good context leads to better responses. However, it’s not possible to add large amounts of data, like thousands of web pages or articles, every time. This is where RAG helps.

Step 1: Store Content in a Special Way

Instead of saving your content only as plain text, we split it into chunks and store each chunk as a vector: a list of numbers that captures the meaning of the text. These vectors live in a vector database. This works like a librarian who understands the ideas in each book, making it easy to find relevant information, even if you use different words.
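A minimal sketch of this storage step, under loud assumptions: `toy_embed` is a hypothetical stand-in that hashes words into buckets, so it only captures word overlap, not meaning. A real system would call an embedding model here and write the results to a vector database. The function names and the 50-word chunk size are illustrative choices, not the project's actual settings.

```python
import hashlib
import math
import re

DIM = 64  # toy dimensionality; real embedding models use far more dimensions


def chunk_text(text: str, max_words: int = 50) -> list[str]:
    """Split a page into chunks of at most max_words words."""
    words = text.split()
    return [" ".join(words[i:i + max_words])
            for i in range(0, len(words), max_words)]


def toy_embed(text: str) -> list[float]:
    """Hash each word into one of DIM buckets, then L2-normalize.

    Stand-in for a real embedding model (assumption for illustration).
    """
    vec = [0.0] * DIM
    for word in re.findall(r"[a-z0-9]+", text.lower()):
        bucket = int(hashlib.md5(word.encode()).hexdigest(), 16) % DIM
        vec[bucket] += 1.0
    norm = math.sqrt(sum(v * v for v in vec))
    return [v / norm for v in vec] if norm else vec


def build_index(pages: dict[str, str]) -> list[dict]:
    """Turn {url: page text} into a flat list of chunk records with vectors."""
    index = []
    for url, text in pages.items():
        for chunk in chunk_text(text):
            index.append({"url": url, "text": chunk, "vector": toy_embed(chunk)})
    return index
```

Keeping the URL next to each chunk matters later: it is what lets the search link back to the page a snippet came from.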

Step 2: Retrieve the Right Pieces

When a visitor asks a question, the system queries the vector database. Instead of grabbing everything, it pulls back just the most relevant snippets—the 3–10 chunks that best match the intent behind the question.
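Retrieval can be sketched as a nearest-neighbor lookup. The example below ranks chunks by cosine similarity in plain Python; a vector database does the same job at scale with its own query API. The three-dimensional vectors and URLs are made up for illustration.

```python
import math


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity between two vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def top_k(query_vec: list[float], index: list[dict], k: int = 5) -> list[dict]:
    """Return the k chunks whose vectors are most similar to the query."""
    scored = [{**item, "score": cosine(query_vec, item["vector"])}
              for item in index]
    scored.sort(key=lambda item: item["score"], reverse=True)
    return scored[:k]


# Hypothetical index with hand-written 3-dimensional vectors.
index = [
    {"url": "/pricing", "vector": [0.9, 0.1, 0.0]},
    {"url": "/blog/cpq", "vector": [0.2, 0.9, 0.1]},
    {"url": "/case-study", "vector": [0.7, 0.0, 0.7]},
]
best = top_k([1.0, 0.0, 0.0], index, k=2)
print([item["url"] for item in best])  # ['/pricing', '/case-study']
```

The value of `k` is the knob behind the "3–10 chunks" above: too few and the answer lacks background, too many and the context fills up with weak matches.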

Step 3: Build the Context

Those snippets are then placed into the context window alongside the user’s query. Now, the LLM has the exact background it needs to generate a meaningful, grounded answer.
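Building the context is mostly string assembly with a size limit. The sketch below is one way to do it; the prompt wording, the `build_prompt` name, and the character budget are assumptions for illustration, and a production version would likely count tokens rather than characters.

```python
def build_prompt(question: str, snippets: list[dict], max_chars: int = 2000) -> str:
    """Assemble retrieved snippets and the user's question into one prompt.

    Snippets are assumed to be sorted by relevance. Adding stops at the
    first snippet that would exceed the max_chars budget.
    """
    parts = []
    used = 0
    for i, snippet in enumerate(snippets, start=1):
        block = f"[{i}] {snippet['url']}\n{snippet['text']}"
        if used + len(block) > max_chars:
            break
        parts.append(block)
        used += len(block)
    context = "\n\n".join(parts)
    return (
        "Answer using only the context below. "
        "If the context does not contain the answer, say so.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {question}"
    )


# Hypothetical snippets for illustration.
snippets = [
    {"url": "/products/cpq", "text": "CPQ stands for configure, price, quote."},
    {"url": "/blog/cpq-basics", "text": "A CPQ tool helps sales teams build quotes."},
]
print(build_prompt("What is CPQ?", snippets))
```

Numbering each snippet and keeping its URL makes it possible to show visitors where an answer came from.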

Step 4: Generate the Response

Finally, the model produces an answer that combines the user’s question with the retrieved content. The output isn’t a random internet guess—it’s based specifically on your company’s materials.
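Put together, the four steps form a small pipeline. In this sketch the retriever and the model are passed in as plain functions, so the flow can be shown without tying it to any particular vendor. `fake_retrieve` and `fake_generate` are hypothetical stubs; in a real deployment they would wrap the vector database query and the LLM API call.

```python
from typing import Callable


def answer_question(
    question: str,
    retrieve: Callable[[str], list[dict]],
    generate: Callable[[str], str],
) -> dict:
    """Retrieve snippets, build a grounded prompt, and generate an answer."""
    snippets = retrieve(question)
    if not snippets:
        # Nothing relevant was found, so do not let the model guess.
        return {"answer": "I could not find that on this site.", "sources": []}
    context = "\n\n".join(s["text"] for s in snippets)
    prompt = (
        f"Answer using only this context:\n{context}\n\n"
        f"Question: {question}"
    )
    return {"answer": generate(prompt), "sources": [s["url"] for s in snippets]}


# Hypothetical stubs standing in for the vector search and the LLM call.
def fake_retrieve(question: str) -> list[dict]:
    return [{"url": "/products/cpq", "text": "CPQ stands for configure, price, quote."}]


def fake_generate(prompt: str) -> str:
    return "CPQ stands for configure, price, quote."


result = answer_question("What is CPQ?", fake_retrieve, fake_generate)
print(result["answer"], result["sources"])
```

Returning the source URLs alongside the answer is a design choice worth keeping: it lets the interface link to the pages behind every response.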

Why RAG Feels Like “Company Memory”

The beauty of RAG is that it makes it seem like the AI has access to your entire knowledge base. In reality, it’s just really good at:

- Finding the right documents quickly

- Condensing them into context

- Answering as if it “knew” them all along

Phases 1 & 2: Transforming HubSpot into an AI-Driven Sales Engine

Phase 1: Putting RAG to Work on HubSpot CMS

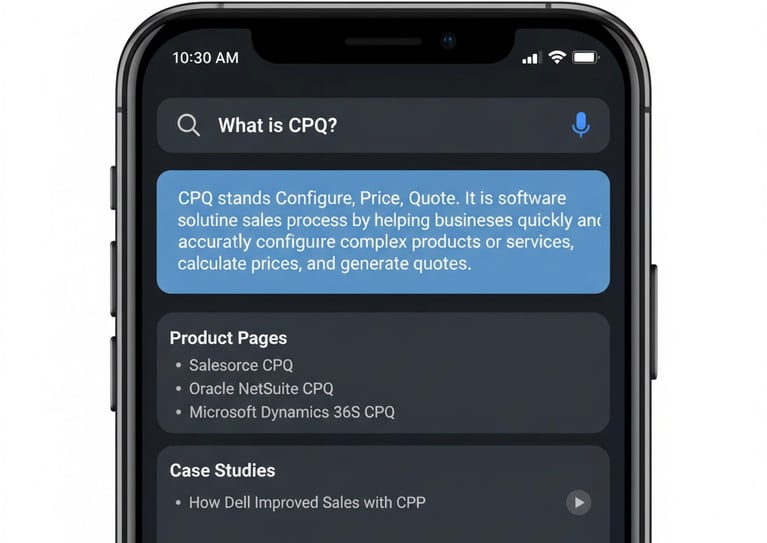

For our client’s HubSpot site, Phase 1 of RAG integration is already live. Here’s what happens now when someone searches or chats:

- The AI fetches the most relevant chunks of content from across the site (pages, blogs, HubDB, resources).

- It generates a summary answer to the query in plain language.

- It presents supporting materials section by section—e.g., related product pages, blogs, case studies, and videos.
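The section-by-section presentation can be sketched as a simple grouping step over the retrieved chunks. This assumes each chunk was stored with a content-type label; the `type` values and the section order below are hypothetical and are not HubSpot field names.

```python
# Assumed display order for known content types (illustrative only).
SECTION_ORDER = ["product", "blog", "case_study", "video"]


def group_by_section(results: list[dict]) -> dict[str, list[str]]:
    """Group result URLs by content type, preserving relevance order.

    Several chunks can come from the same page, so URLs are de-duplicated.
    Types not listed in SECTION_ORDER are shown after the known ones.
    """
    grouped: dict[str, list[str]] = {}
    for result in results:  # results are assumed sorted by relevance
        urls = grouped.setdefault(result["type"], [])
        if result["url"] not in urls:
            urls.append(result["url"])
    known = [t for t in SECTION_ORDER if t in grouped]
    other = [t for t in grouped if t not in SECTION_ORDER]
    return {t: grouped[t] for t in known + other}


# Hypothetical retrieval results.
results = [
    {"url": "/blog/cpq-basics", "type": "blog"},
    {"url": "/products/cpq", "type": "product"},
    {"url": "/blog/cpq-basics", "type": "blog"},
    {"url": "/cases/acme", "type": "case_study"},
]
print(group_by_section(results))
```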

This not only helps visitors get answers faster but also surfaces the right sales content at the right time.

Phase 2: What’s Next

Phase 2 will make the system more intelligent by adding:

- Intent detection: Classify queries as product, ROI, knowledge, or comparison.

- Result prioritization: If it’s ROI-focused, push case studies higher; if it’s product-focused, show product pages first.

- Conversation memory: Multi-turn chats that remember earlier context (“Tell me about CPQ” → “Now show me ROI”).
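Since Phase 2 is still ahead, the following is only a sketch of how these three pieces could fit together, not the planned implementation. It assumes a simple keyword-based classifier (a real system might ask the LLM to classify instead), a fixed score boost for the preferred content type, and a memory that just keeps the last few queries. All keywords, names, and the boost value are hypothetical; no boost is defined for comparison queries because the plan above does not say what they should favor.

```python
import re
from collections import deque

# Hypothetical keyword lists; anything unmatched falls back to "knowledge".
INTENT_KEYWORDS = {
    "roi": {"roi", "savings", "payback"},
    "comparison": {"vs", "versus", "compare"},
    "product": {"pricing", "features", "demo"},
}
# Which content type each intent should favor.
PREFERRED_TYPE = {"roi": "case_study", "product": "product", "knowledge": "blog"}


def classify_intent(query: str) -> str:
    """Return the first intent whose keywords appear in the query."""
    words = set(re.findall(r"[a-z0-9]+", query.lower()))
    for intent, keywords in INTENT_KEYWORDS.items():
        if words & keywords:
            return intent
    return "knowledge"


def prioritize(results: list[dict], intent: str, boost: float = 0.2) -> list[dict]:
    """Re-rank results, boosting the content type preferred for the intent."""
    preferred = PREFERRED_TYPE.get(intent)

    def adjusted(result: dict) -> float:
        return result["score"] + (boost if result["type"] == preferred else 0.0)

    return sorted(results, key=adjusted, reverse=True)


class ConversationMemory:
    """Keep the last few user queries so follow-ups retain their context."""

    def __init__(self, max_turns: int = 3) -> None:
        self.turns: deque[str] = deque(maxlen=max_turns)

    def contextual_query(self, query: str) -> str:
        """Combine earlier queries with the new one, then remember it."""
        combined = " ".join([*self.turns, query])
        self.turns.append(query)
        return combined


memory = ConversationMemory()
memory.contextual_query("Tell me about CPQ")
follow_up = "Now show me ROI"
search_text = memory.contextual_query(follow_up)  # used for retrieval
intent = classify_intent(follow_up)               # used for ranking
print(search_text, "|", intent)
```

In this sketch the combined text drives retrieval, so the follow-up still finds CPQ content, while the intent is taken from the latest query alone, so the ranking reflects what the visitor is asking for right now.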

This will transform search into a true AI sales agent, guiding visitors more strategically than static navigation ever could.

How This Helps with Sales

AI-powered search is no longer just a convenience feature; it’s a sales accelerator:

- Product queries highlight product pages.

- ROI questions bring up case studies.

- Knowledge queries surface blogs and explainers.

Every interaction guides the prospect deeper into relevant resources, ensuring your website works as an always-on sales rep.

Phase 1 turned a HubSpot site into an AI-powered search and sales tool. Phase 2 will add intelligence, memory, and personalization—bringing us closer to a future where your website isn’t just a brochure, but an AI-driven sales partner.